Cautions Against AI Automatic Redlining: Top 3 Reasons

Be cautious with AI-driven contract redlining; understand its limitations to avoid inefficiencies and ensure effective legal processes.

Artificial intelligence (AI) used to be the subject of science fiction movies that took place in a distant future. Today, it’s a normal part of our lives. AI is incorporated in everything from unlocking our iPhones to turning on the lights when we get home to driving us around Las Vegas. We’re on the cusp of an AI revolution, which is exciting on a lot of levels.

Along with affecting us personally, AI has the potential to revolutionize business, especially in companies driven by marketing and sales. AI is quickly expanding into all types of industries, including legal tech. For instance, using machine learning to automate mundane, repetitive tasks can help law firms and corporate legal departments save money and get more done. AI for contracts has the potential to cut down on editing time and free teams to put their people to work in more constructive and important ways.

However, it’s easy to get swept up in the excitement and forget that there’s no substitute, today, for human emotions, thought, and reasoning when it comes to certain tasks.

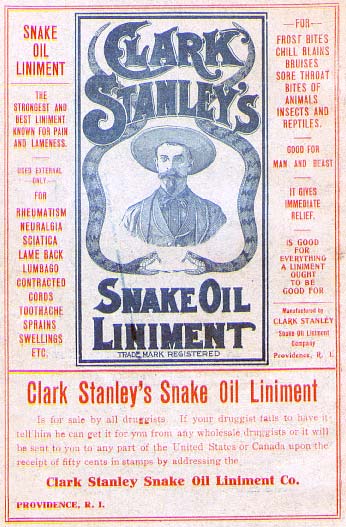

Open questions about the ethics of AI and attorney work product aside, before businesses get too caught up in the excitement and spend money on AI software, it’s important to understand the practical realities of implementing AI in legal processes. It’s also important to know how to tell which companies sell software designed to legitimately help your business grow and succeed, and which sell the modern-day equivalent of snake oil, profiting at the expense of customers who are unable (or unwilling) to consider what’s inside the bottle.

How to recognize AI snake oil

Princeton University professor Arvind Narayanan recently called many modern AI companies “snake oil.” We won’t get into the whole history of the term here (though it’s worth reading about when you have the time). The comparison is simple: both are marketed as a cure-all for an underlying problem, yet neither can deliver on its promises.

Let’s look at how AI software that makes promises it can’t keep is hurting businesses, using an example we can all recognize and relate to: HR recruiting software.

Example of AI snake oil: HR recruitment software

Recruitment software is an important place to start a discussion of AI snake oil because hiring affects every part of every business. Employers and HR managers probably know that recruiting software can help them make great hires. It can also do the opposite, hiding potential all-star employees from view based on a vague algorithm or, worse, making badly wrong assumptions about candidates.

Many companies use AI recruiting and HR software to save time and to find employees with the characteristics the company values. Much of the AI recruiting software on the market today requires candidates to submit a video. The software reviews the video with an algorithm that rates and scores each candidate, then shares that data with the employer.

At first, this might seem great. After all, who wants to watch hundreds of videos from potential job candidates? Automated software should cut down on time and allow employers to only focus on videos from people who have the skills and cultural fit the employer is looking for, right?

Well, theoretically, yes. Having a machine filter through hundreds of applicants to only deliver the top candidates for your review sounds like a good way to save time and resources. In practice, though, the results are very different.

Instead of scanning videos to determine who’s qualified for the position, the AI software observes the candidate’s voice, gestures, and body language. It doesn’t consider qualifications and skills; it focuses solely on how the person comes across on video. From this information, the algorithm generates a score that has nothing to do with qualifications. As a result, employers often never see videos from candidates who have every necessary qualification but fail to meet the algorithm’s arbitrary standards.

As researchers from Cornell University state, “While there is evidence for the predictive validity of alternative assessments, empirical correlation is no substitute for theoretical justification.” AI technology doesn’t consider why a candidate has a particular speech pattern or makes particular gestures. It simply ingests the information, turns it into numerical data, and creates a somewhat abstract and arbitrary rating system for employers to review.

Employers who rely solely on a machine to tell them which candidates are most suitable for a position risk losing out on great hires. Bad hires based on algorithms instead of personal interaction could have far-reaching effects and disrupt how companies operate for years to come. The example is also useful for thinking about how AI technology can affect other professions and tasks, such as contract negotiation, and why it’s important to understand AI’s limitations before investing in contract AI for your legal team.

What our customers found when they tested pure AI-based mark-ups

A growing list of companies promise automatic redlining, AI negotiation, or a guaranteed turnaround time in which a consultant marks up the contract for you (side note: is that really AI?). Take caution. The risk of error is high enough that you’ll still need a human to review your contracts, which could mean spending just as much (if not more). You also risk missing important details.

Here are three practical realities to consider if you’re looking at automatic redlining using AI.

1. Automated redline features need significant data that you probably don’t have, which means you need human review anyway

Our customers found very few scenarios where their company had a pristine data set, or where their contracts allowed a cookie-cutter approach to editing key sections or responding to edits automatically. There will always be edge cases, negotiation context, leverage considerations, and the need for a human touch. Some industries also silo contract negotiation data, which makes the problem worse.

This is especially relevant when the contract you’re redlining is missing important sections. If a provision is missing, where should it be inserted? Before the Misc. section? What if there is no neatly defined Misc. section at the end of the contract? And as a recent client of ours pointed out, what if the counterparty adds an odd provision such as “Co. shall buy a gold-plated golf cart at the end of the term”?
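To see why the placement question is harder than it sounds, consider a deliberately naive rule a redlining tool might follow. The Python sketch below is hypothetical: the function name `insert_before_misc` and the heading pattern are illustrative assumptions, not how DocJuris or any particular vendor works. It inserts a missing clause immediately before a section headed "Misc." or "Miscellaneous". That works on a tidy template, but the rule has nowhere to put the clause when third-party paper calls that section something else, such as "General Provisions".

```python
import re
from typing import List, Optional

# Hypothetical landmark: a heading like "Misc.", "12. Miscellaneous", etc.
# Real contracts use many other names ("General Provisions", "Boilerplate").
MISC_HEADING = re.compile(r"^\s*(\d+\.\s*)?(misc\.?|miscellaneous)\b", re.IGNORECASE)


def insert_before_misc(sections: List[str], new_clause: str) -> Optional[List[str]]:
    """Naive rule: place new_clause just before the Misc. section heading.

    Returns a new list of section headings, or None when no recognizable
    Misc. heading exists and the rule cannot decide where the clause goes.
    """
    for i, heading in enumerate(sections):
        if MISC_HEADING.match(heading):
            return sections[:i] + [new_clause] + sections[i:]
    # No landmark found: the rule has nowhere to put the clause.
    return None


if __name__ == "__main__":
    tidy_template = ["1. Definitions", "2. Services", "3. Miscellaneous"]
    third_party_paper = [
        "Article I Definitions",
        "Article II Services",
        "Article III General Provisions",
    ]
    print(insert_before_misc(tidy_template, "Limitation of Liability"))
    # ['1. Definitions', '2. Services', 'Limitation of Liability', '3. Miscellaneous']
    print(insert_before_misc(third_party_paper, "Limitation of Liability"))
    # None
```

Returning `None` rather than guessing is the design choice worth noting: when the rule cannot find its landmark, the honest outcome is to hand the decision to a human reviewer. Note also that even the "successful" insertion leaves section numbering and any cross references untouched, which is exactly the kind of follow-on work a person still has to check.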

Here are some other areas where automatic redlining and negotiation have failed our tests:

- Defined terms

- Cross references

- Local law considerations

- Commodity prices

- Changing economic conditions

- Seasonal pressures (e.g., fiscal budgets or quarterly quotas)

- Surgical redlines

- Commenting

- Business provisions impacting tax, accounting, and insurance

These areas will not be analyzed, corrected, or automated by a machine anytime soon. They require a human who understands the intricacies of contract negotiations in order to make sure all sections are correct within the context of the transaction and leverage between the parties.

While contracts generally have an orderly structure, each company you work with has its own approach to drafting (i.e., third-party paper). So the first expectation to set when using AI for contract redlining is that it will never be correct out of the box when trained on public or "black box" data. Even if the process uses your own data, expect training the algorithm to be expensive and time-consuming in the short term.

2. You can’t reliably automate high-risk, low-volume contracts

By definition, high-risk, low-volume contracts give your AI program fewer examples to draw from. That means fewer data points and fewer opportunities to build machine learning models.

Further, high-risk contracts tend to be longer and contain more nuances that are simply too difficult for AI to address, so the risk of inaccuracy is very high. In testing 50+ page construction contracts and industry-specific master service agreements (with related exhibits), we found a laundry list of problems, ranging from scope-specific inaccuracies to incorrectly analyzed paragraphs.

Think about some current technologies that incorporate AI and the issues they have encountered. Would you expect Tesla’s self-driving technology to work anywhere in the world, on any terrain, without any context? If the accident on the first day of Las Vegas’s self-driving bus service is any indication, then no, we can’t expect self-driving technology to work out of the box. Even Apple’s Face ID, which is supposed to be the most advanced security measure on cell phones, was cracked within days of its release with a simple $150 mask created by Vietnamese hackers.

These high-profile examples teach a common lesson: AI technology rarely has all the data it needs to navigate unpredictable scenarios. Contract AI faces the same gap, and as a result, most automated contract systems end up marking up the parts that matter least to the reviewer.

When reviewing contracts, the reviewer is concerned with concepts and therefore wants language that’s as clear as possible. Some AI software, by contrast, is more concerned with non-authoritative style rules: it might change “will” to “shall” or substitute words that, while correct, do not fit the context of the contract and obscure its meaning. Incorrect punctuation and less-than-ideal word choices do not help the reviewer address the most important concepts that are unique to the transaction.

3. Fixing redlines in Word is even harder than just doing the review yourself

We’ve already talked about how problematic redlining in Word can be. It can be made ten times worse with contract AI that does more harm than good.

Imagine a technology that incorrectly deletes words and adds text throughout a 100-word paragraph. Fixing those automatic redlines will cost you, or someone on your team, more time and effort than doing the edit right from the beginning using a set of guidelines and historical precedent.

On top of this, imagine the track changes nightmare that will ensue as you try to navigate the redlined text. In some cases, you might even have to read the language TWICE. For example, you may have to undo the automatic insert, re-add the deleted words, manage the track changes, and remove authorship from metadata. Then, you’ll need to re-read the document to make sure it’s correct. You may even need to bring in a second person to re-read the document to make sure it’s accurate and makes sense.

You might be thinking that this type of editing scenario would only happen if your AI hasn’t yet learned your company’s standards. However, even after your AI contract software has been trained on them, you’ll still need a human to review its automated redlines. Depending on how accurate the redlines are, this could save you time (e.g., a human review might take 30 minutes instead of all day), or it could take even more time if the redlines are inaccurate and the reviewer has to constantly check them.

Finally, let’s assume the first turn is perfectly prepared by a fully automated system. Many negotiations still progress to a second or third turn, and there the problem is even more straightforward: you’re now stepping into a document you didn’t prepare the first time around.

A better approach to contract review automation

At DocJuris, we believe that empowerment and AI contract review software go together. We also understand the need for software that improves efficiency and lets your team spend its time on work that cannot be easily automated.

That’s the beauty of DocJuris.

Rather than attempting to replace your team altogether, our platform empowers it. In today's technology environment, it’s important to recognize which human work can be automated and where it remains necessary.

The future is bright, but never assume that today’s lack of data and plethora of edge cases can be overcome with a magic wand. At DocJuris, we take a practitioner-focused approach, providing practical tools and a great user experience to help you achieve a better ROI.

***

Book a demo with DocJuris today to see how our redlining platform can simplify your contract negotiations.
